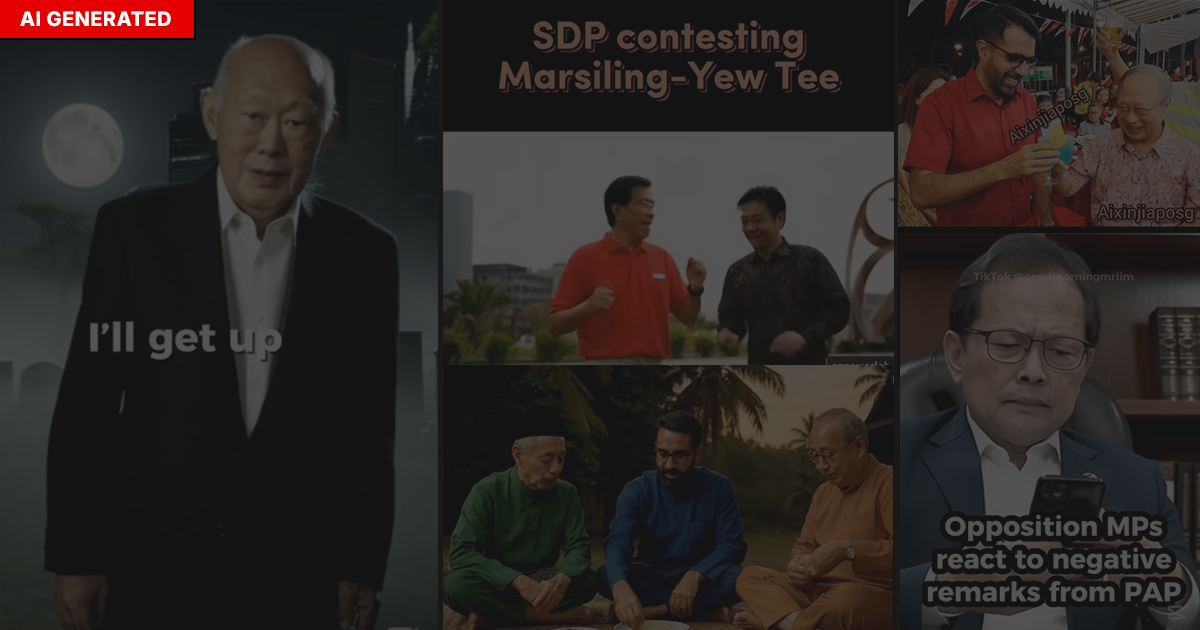

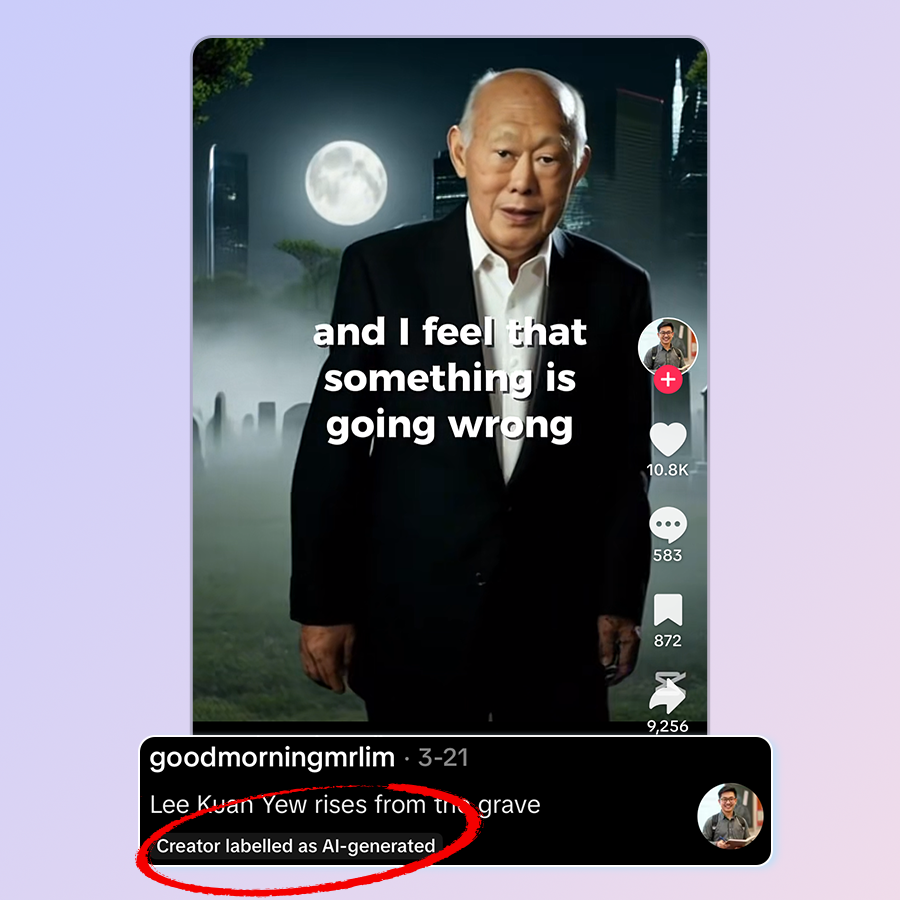

This TikTok video of Singapore’s late founding prime minister Lee Kuan Yew, for example, has received 176,700 views as of the afternoon of Apr 9.

It was created by @goodmorningmrlim on Mar 21 this year and has a “Creator labelled as AI-generated” label on it.

Meanwhile, this TikTok clip of Singapore Democratic Party (SDP) chief Chee Soon Juan appearing to weep, accompanied by the caption “No more fight at Bukit Batok SMC. Where is Chee Soon Juan suppose to go for GE2025?”, doesn’t have the abovementioned label.

The creator, @aixinjiaposg, included “AI generated content” in its bio instead. This video, created on Mar 13, has been seen 24,300 times as of the afternoon of Apr 9.

These videos come as Singapore’s leaders warn against deepfakes made of them.

Prime Minister Lawrence Wong cautioned in March about deepfake videos and images of him selling products and services like cryptocurrency and Permanent Resident application services. In June last year, Senior Minister Lee Hsien Loong also warned about malicious deepfake videos of him commenting on international relations and foreign leaders.

More recently, a TikTok account, @ahboon87a, created a series of Chinese-language videos that open with Lee Kuan Yew’s death before launching into hot-button issues such as immigration, housing and transportation, and questioning whether Singapore has achieved what its leaders previously promised.

The tone of @ahboon87a’s videos appears negative towards the government.

These videos, possibly put together using publicly available images and AI-generated content as well as text-to-speech software, were progressively published between Apr 6 and 7.

The two most-viewed clips have garnered over 80,800 and 49,300 views as of the afternoon of Apr 9, with hundreds more in comments and reactions. The videos in question focused heavily on pitting foreign labour and immigrants against the plight of Singaporeans.

The account’s first post was on Nov 30, 2023, but its next post was only published more than a year later in early April 2025.

Why worry about this now?

Amid the rapid advancements of generative AI, deepfakes have become a feature of elections around the world in the past year or so – including during the United States Presidential Election, as well as the elections in India and Indonesia.

Mr Benjamin Ang, a senior fellow at Singapore’s S Rajaratnam School of International Studies (RSIS) think-tank, told CNA that publicly available statistics suggest the number of deepfakes grew more than 10 times between 2022 and 2023, then another 10 times between 2023 and 2025.

It is no different in Singapore, except that its government has taken pre-emptive moves to curb their impact ahead of a General Election, which must be held by November this year.

With the recent release of the new electoral boundaries, political parties have ramped up their activities under the expectation that the polls will be held sooner rather than later.

But in tandem with the rise of election news and chatter, deepfakes and other digitally manipulated content of political parties and candidates are also on the rise.

Academic Dr Carol Soon told CNA that while the exact impact of such content on election outcomes cannot be determined, there are real-world ramifications. For instance, falsehoods “pollute the information ecosystem” and increase people’s confusion.

As a result, they do not know what and whom to trust.

Dr Carol Soon, Associate Professor, National University of Singapore’s Department of Communications and New Media

She added that while some AI content is created for entertainment or humour, it can still confuse people. That’s why there is merit in requiring people to label such content even if the intent behind creating it is benign, she said.

“This will help bolster people’s immunity to deepfakes by sensitising them to different types of manipulated content,” Dr Soon said.

Another academic, law professor Eugene Tan, pointed out that the barriers to entry for creating such content are lower.

There is also little regard for proper fact-checking, said the associate professor of law at the Singapore Management University’s Yong Pung How School of Law.

At the same time, such content consumes precious resources.

Dr Soon said the media, government and political candidates would have to spend time and money debunking falsehoods, when these resources could instead be used to educate the public on what needs to be done to solve society’s problems.

What do politicians think?

One of the politicians featured in some of the manipulated videos seen online is People’s Power Party (PPP) chief Goh Meng Seng. In one such video, he was seen with clown makeup on.

He told CNA that he was aware of the existence of such videos and, while he believes in freedom of expression, it is “regrettable” that a small minority of people have displayed “immature mischief” in their expression of views.

Mr Goh said he was “not that (concerned) about such videos having any significant negative impact on myself or my party”.

He added he will only take “firm actions” if there is any gross misrepresentation or defamatory content in these AI-generated videos.

The ruling People’s Action Party (PAP), too, has seen several of its members, including PM Wong and ministers Chan Chun Sing, Ong Ye Kung and K Shanmugam, appear in such manipulated content.

When asked, a party spokesperson said online misinformation and AI-powered deepfakes are a concern for countries everywhere, including Singapore.

“This is an issue that affects everyone, not just politicians,” the spokesperson added.

The Progress Singapore Party (PSP) declined comment for this story, while The Workers’ Party did not respond to queries.

Measures in place

Authorities in Singapore, anticipating such a scenario, had passed a law last October to address this. The Elections (Integrity of Online Advertising) (Amendment) Bill prohibits the publication of digitally generated or manipulated content during elections that realistically depicts a candidate saying or doing something that they did not say or do.

The law will apply to online election advertising that depicts people who are running as candidates, and comes into effect once the writ of election is issued and until the close of polling.

Under the law, the Returning Officer – who oversees the election – can issue corrective directions to individuals who publish such content, social media services, and internet access service providers to take down offending content, or to disable access by Singapore users to such content during the election period.

Social media services that fail to comply face a fine of up to S$1 million upon conviction. For all others, non-compliance may result in a fine of up to S$1,000, imprisonment of up to 12 months, or both.

Candidates can ask the Returning Officer to review content that may breach the prohibition. If the case is assessed to be genuine, corrective directions can be issued.

However, candidates who knowingly make a false or misleading declaration may be fined or may lose their seat in the election.

But what about content that is posted before this law kicks in?

When asked, the Ministry of Digital Development and Information (MDDI) pointed CNA to what was said during parliamentary debates on the Bill before it was passed.

Specifically, Minister for Digital Development and Information Josephine Teo, in her closing speech at the second reading of the Bill, pointed out that the legislation focuses on a specific category of deepfakes during elections.

Other laws such as the Protection from Online Falsehoods and Manipulation Act (POFMA) and the Online Criminal Harms Act (OCHA) may be used to tackle other forms of deepfakes.

Additionally, Mrs Teo said the Infocomm Media Development Authority (IMDA) will introduce a Code of Practice to deal with digitally manipulated content at all times, beyond election periods. This, she said, would require social media companies to play a larger role to tackle deepfakes given how they shape our online experiences.

The code is expected to be introduced this year, she added.

What are social media companies doing?

On their end, tech giants like Google, Meta and TikTok told CNA they are putting in place measures to address such digitally manipulated content.

TikTok, whose platform hosts much of the AI-generated and altered content highlighted here, said it requires creators to label AI-generated content accordingly and does not allow harmfully misleading AI-generated content of public figures, including content that falsely depicts them endorsing a political view or being endorsed.

For the Singapore General Election specifically, it said it has set up a local elections taskforce composed of multi-disciplinary teams focused on democracy, elections, civil society and technology.

It added that “the work has started” and it is engaging with experts from its regional Safety Advisory Councils, such as NUS’ Natalie Pang and other academics. Dr Pang is head of NUS’ Communications and New Media department.

Similarly, Meta said it had established a team composed of subject matter experts from a wide range of disciplines including election integrity, misinformation, safety, human rights and cybersecurity to monitor and respond to emerging risks.

The team covering the Singapore election includes Singaporean nationals who are deeply familiar with the local context and local laws and regulations, Meta said.

When asked how big this team is, a company spokesperson declined to comment. Meta operates Facebook, Instagram, Threads and chat app WhatsApp.

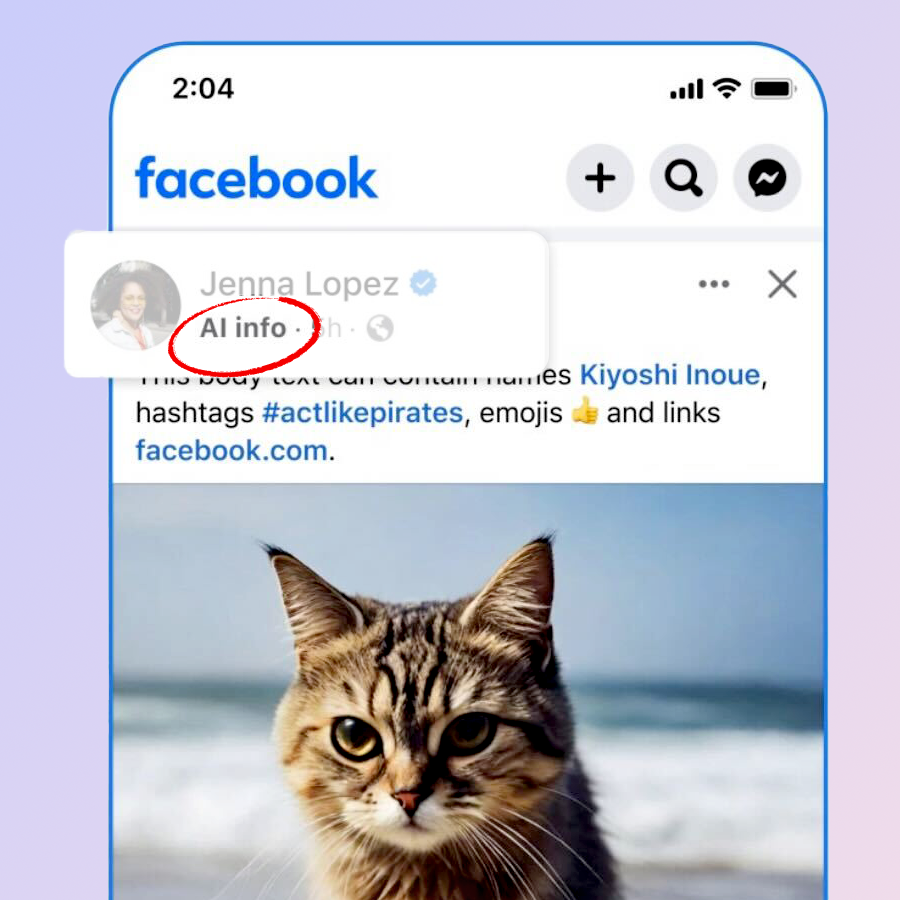

As for AI-generated content, Meta said advertisers are required to disclose when they use AI or other digital methods to create or alter ads about social issues, elections, or politics in certain cases.

The spokesperson told CNA that non-disclosure may result in the ad being removed, and repeated non-disclosures may result in “penalties on the account”.

Meta has also introduced labels for AI-generated content posted on Facebook and Instagram.

It would also remove anything that violates its community standards “as soon as (it) becomes aware”, and this applies to all content, including that generated or altered by AI.

Google, meanwhile, said it requires YouTube creators to disclose when they create realistic altered or synthetic content and these would have a label attached. It may also add the label even if the creator did not disclose if the content “could confuse or mislead viewers”.

It added that its misinformation policies “prohibit technically manipulated content that could mislead users and cause significant harm”.

Are these efforts enough?

NUS’ Dr Soon said that the tech giants are looking to mitigate the risks with regard to generative AI and how it’s used.

“However, recent cuts to trust and safety headcount is worrying as it means tech companies will have fewer resources to deal with a growing volume of misinformation and disinformation,” she said.

TikTok had announced the restructuring of its trust and safety team globally in February 2025 while cutting hundreds of content moderation jobs, as it moved towards greater use of AI in this space.

Meta, too, recently dropped its fact-checking programme in the United States and moved to “community notes” similar to those found on social media site X, formerly known as Twitter.

Assoc Prof Tan echoed this point, saying the starting point is to assume that these platforms “cannot be relied on” to properly counter misinformation and disinformation due to the lack of resources.

The short campaign period of nine days and the cooling off day means there is little time to deal with such content, he added.

Yet given that these tech giants have made great progress in identifying copyright infringement on their platforms, Mr Ang said they should apply the same scale of resources to identifying synthetic content like deepfakes.

As for whether the current legal regime is sufficient to deal with the threat, the SMU professor said it remains to be seen, given that laws such as the Elections (Integrity of Online Advertising) (Amendment) Bill deal with content only after its publication.

They do not have a “preventative or prophylactic capability”, he noted.

“It is likely to be a ‘whack a mole’ approach but this is the reality given the technology’s capabilities,” Assoc Prof Tan said.

“You can’t have a blanket ban on such content as that would be interfering with political communication during a general election.”

Tackling the issue also requires citizens to do their part.

RSIS’ Mr Ang said responding to the threat of disinformation or just plain, old-fashioned lies and rumours requires the “combined forces of laws, fact-checking, media literacy, education, civic consciousness, and personal responsibility to stop and think before sharing”.

The PAP spokesperson, too, encouraged Singaporeans to verify information with trusted sources like its official party website and social media accounts.

“By staying informed and working together, we can ensure a safe digital space, protect our community and uphold our democratic processes,” the spokesperson said.

Assoc Prof Tan similarly exhorted Singaporeans to be discerning in consuming AI-generated or altered content.

Ultimately, if we fall for malicious and false political content, we will be the real losers.

Eugene Tan, Associate Professor, Singapore Management University’s Yong Pung How School of Law